Artificial Intelligence is a key technology of the future. Our construction set promotes an early interest in this technology and prepares pupils for possible future careers. They can explore the basic principles of AI in a playful way and gain an insight into how AI technologies work. The set contains three models with different levels of difficulty. They illustrate the diversity of AI applications and offer a perfect introduction to this forward-looking technology. The scope of delivery includes accompanying teaching materials designed for classroom use, which deepen pupils’ understanding of Artificial Intelligence.

Time required

Introduction to the topic

Artificial intelligence has already found its way into many areas and plays an important role in our everyday lives. Understanding machine learning and algorithms enables pupils to approach the topic responsibly and to form their own opinion about it.

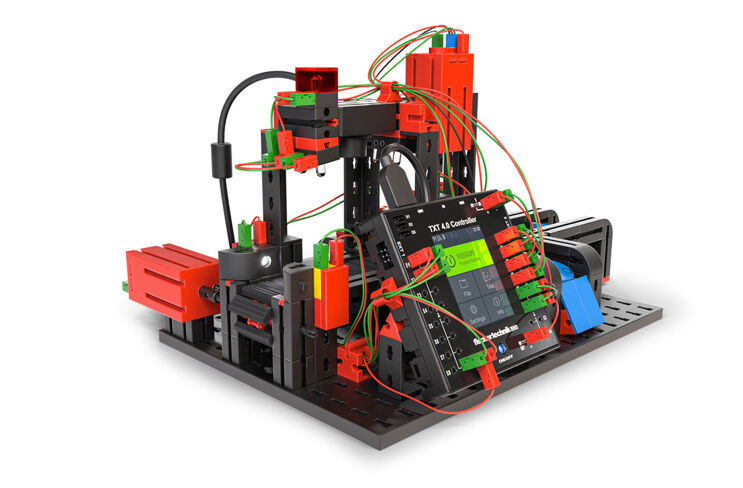

The TXT 4.0 makes it very easy for pupils to take their first steps with artificial intelligence (AI). They find out what is behind it and how they can use it for their own models.

In simple terms, AI is a program that behaves intelligently in some way. Depending on the task at hand, this can involve, for example, recognising images, translating texts or even composing music.

There are many different types of AI. Today, however, when we talk about AI, we usually mean so-called neural networks. Put simply, these are loosely modelled on the structures in our brains: neurons are cells that are connected to many other neurons, and networks of interconnected neurons are able to learn.

Different tasks call for different types of network, just as our language centre works differently from our visual memory. The same applies to AI: a type of network suited to the task at hand must be used.

How does a neural network work?

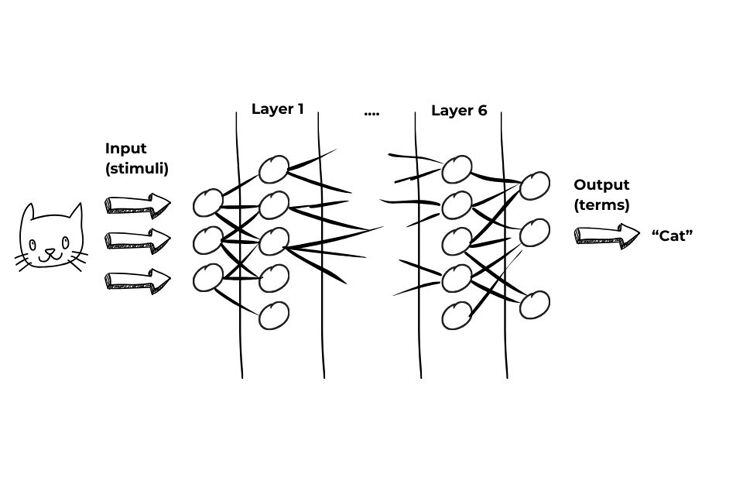

The illustration shows the basic structure of a network.

The signals arrive on the left-hand side (input layer); this is comparable to our sensory cells. The sensory cells are connected to neurons, and the strength of each connection determines how strongly a neuron reacts to the stimuli coming from the left.
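The role of connection strength can be illustrated with a single artificial neuron. The following Python sketch uses a common textbook model, not the software running on the TXT 4.0; the weight values are made up for illustration, and the sigmoid function is just one common choice for squashing the neuron’s output into the range 0 to 1.

```python
import math

def neuron(inputs, weights, bias=0.0):
    """Return the output of one artificial neuron.

    Every input signal is multiplied by the strength (weight) of its
    connection; the neuron adds these up and squashes the sum into the
    range 0..1 with the logistic (sigmoid) function.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

# Illustrative weights: one strong connection (4.0) and one weak one (0.1).
weights = [4.0, 0.1]
print(neuron([1.0, 0.0], weights))  # about 0.98: stimulus on the strong connection
print(neuron([0.0, 1.0], weights))  # about 0.52: same stimulus on the weak connection
```

The same stimulus produces a much stronger reaction when it arrives over the strong connection; learning means changing these weights.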

On the right-hand side (output layer), we see the output neurons. Put simply, these stand for the terms that can be assigned to what the senses on the left-hand side have perceived. If the network “sees” an image on the left-hand side, for example, the term “cat” could be activated on the right.

Between the sensory cells on the left and the output neurons on the right, there are structures made up of many neurons. These are usually arranged in individual “layers” and every layer has a certain task or function.

The nodes of an artificial neural network are divided into layers for the sake of clarity. We speak of the input layer, the hidden layers and the output layer.

Different tasks also call for different ways of linking the neurons. Some link structures are well suited to recognising parts of images, while others are good at recognising sequences in time, such as the words in a sentence.

Terms such as deep learning, DeepMind or the translation program DeepL refer to the structure of the neural network, a so-called “deep” network. Deep simply means that there are many layers in succession, with each layer reacting to the outputs of the previous layer.
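What “many layers in succession” means can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the weights are random numbers rather than learned values, the layers have no bias terms, and the layer sizes are made up.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights):
    """One layer: weights[j][i] is the strength of the connection
    from input i to neuron j of this layer."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def forward(inputs, network):
    """Pass the signals through the layers one after another, left to right.
    Each layer only sees the outputs of the layer before it."""
    signals = inputs
    for weights in network:
        signals = layer(signals, weights)
    return signals

def random_network(sizes, seed=0):
    """Build a network with the given number of neurons per layer.
    The weights are random here; a trained network would have learned them."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]

# 3 input signals -> two hidden layers of 4 neurons -> 2 output neurons.
# Adding more numbers to the list makes the network "deeper".
network = random_network([3, 4, 4, 2])
outputs = forward([0.2, 0.9, 0.4], network)
print(outputs)  # one value between 0 and 1 per output neuron
```

A network with more entries in the size list is “deeper”: the loop in `forward` simply runs through more layers.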

In simple terms, learning in a neural network works like this: stimuli, e.g. images, are presented on the left-hand side, and we check whether the correct term appears on the right-hand side. If it does not, the strengths of the connections are adjusted, working from right to left, and a new attempt is made. This is repeated as often as necessary until the network responds correctly.
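For a single neuron, this correction step can be written out in a few lines. The Python sketch below trains one neuron by gradient descent on a made-up task (output 1 if at least one of two signals is on, i.e. logical OR). In a network with hidden layers, the same kind of correction is passed back from the output layer through the hidden layers (backpropagation), which is left out here for brevity. The learning rate and number of passes are arbitrary choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Made-up training examples: two input signals and the correct answer.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights = [0.0, 0.0]   # connection strengths, initially "no opinion"
bias = 0.0
learning_rate = 0.5

def predict(inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

for epoch in range(1000):                 # repeat as often as necessary
    for inputs, target in examples:
        output = predict(inputs)          # present the stimulus
        error = target - output           # compare with the correct answer
        # Correct the connections: each weight changes in proportion to
        # the error and to the signal that flowed through it.
        weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
        bias += learning_rate * error

for inputs, target in examples:
    print(inputs, "expected", target, "got", round(predict(inputs), 2))
```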

Training a large network means processing it over and over again, which takes a great deal of computing time. This is why AI models for the TXT 4.0 are trained on a PC rather than on the controller itself.

Work on artificial intelligence has been carried out for many decades. For a long time, however, computers were not powerful enough to train large neural networks. To train a network from scratch so that it can recognise objects, millions of images are required, which the network processes over and over again from left to right, correcting itself each time from right to left.

For AI to be able to do anything, it has to learn first and then apply what it has learned later. The basic principle always works according to the following three steps:

1. Collection of training data – examples are collected which the AI later uses for learning. These can be, e.g., images of different animals or objects. We then have to label these images so that the AI knows what it is supposed to learn.

2. Training the AI – in this step we show the AI our examples and what we mean by them. The AI then adapts itself so that it finds the matching term for a given image as reliably as possible.

3. Use of AI – once training is complete, we can use the network to recognise new images that it has not seen before.
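The three steps can be traced in a deliberately tiny Python sketch. To keep it short, a nearest-centroid classifier (which simply averages the examples of each name) stands in for the neural network; the two numbers per example are hypothetical image features, and the names and values are made up.

```python
import math

# Step 1: collect training data. Each example pairs some features with a
# name (label). The features are HYPOTHETICAL values, made up for illustration.
training_data = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.1), "cat"),
    ((0.2, 0.9), "dog"),
    ((0.1, 0.7), "dog"),
]

# Step 2: train. A nearest-centroid classifier "learns" the average
# feature vector of every name.
def train(data):
    sums, counts = {}, {}
    for features, label in data:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

# Step 3: use. A new, unseen example gets the name whose average is closest.
def predict(model, features):
    return min(model, key=lambda label: math.dist(model[label], features))

model = train(training_data)
print(predict(model, (0.85, 0.2)))   # cat
print(predict(model, (0.15, 0.8)))   # dog
```

A neural network replaces both the averaging in step 2 and the distance comparison in step 3, but the order of the three steps stays the same.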

We will quickly realise that training is not as easy as it might seem. We need the right images, plenty of them, and suitable lighting conditions. But this is best discovered by exploring and experimenting with the models.

To keep training the AI from becoming too difficult, we use a pre-trained AI as the basis and train it further with our examples. This AI can already recognise objects and only has to be taught the “names” of the things it is expected to recognise. This type of learning is called transfer learning.
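The idea of transfer learning can be sketched in Python without any AI library. The “pre-trained” part below is a hypothetical stand-in with fixed random weights, the input “images” are just four numbers, and the names “car” and “bike” are made up. The point is only that the pre-trained weights stay frozen while a small new output layer is trained to attach names to the features. Real toolchains do the same with large pre-trained image networks; the kit’s actual training workflow is not shown here.

```python
import math
import random

# HYPOTHETICAL stand-in for the pre-trained part of a network. In a real
# pre-trained model these weights were learned from millions of images;
# here they are fixed random numbers. They stay FROZEN: never updated below.
_rng = random.Random(42)
FROZEN_WEIGHTS = [[_rng.uniform(-1, 1) for _ in range(4)] for _ in range(8)]

def pretrained_features(image):
    """Map a raw input (here just 4 numbers) to 8 features, plus a constant 1."""
    feats = [math.tanh(sum(w * x for w, x in zip(row, image))) for row in FROZEN_WEIGHTS]
    return feats + [1.0]  # the constant input acts as a bias for the new layer

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_head(examples, names, epochs=1000, lr=0.1):
    """Train ONLY the new output layer that maps features to our names."""
    n = len(FROZEN_WEIGHTS) + 1
    head = [[0.0] * n for _ in names]
    for _ in range(epochs):
        for image, name in examples:
            feats = pretrained_features(image)
            probs = softmax([sum(w * f for w, f in zip(row, feats)) for row in head])
            for k in range(len(names)):
                # Gradient step for softmax + cross-entropy: (target - prob) * feature
                err = (1.0 if names[k] == name else 0.0) - probs[k]
                head[k] = [w + lr * err * f for w, f in zip(head[k], feats)]
    return head

def classify(head, names, image):
    feats = pretrained_features(image)
    scores = [sum(w * f for w, f in zip(row, feats)) for row in head]
    return names[scores.index(max(scores))]

# Teach two made-up "names" from a handful of examples.
names = ["car", "bike"]
examples = [
    ([1.0, 0.0, 0.0, 0.0], "car"), ([0.9, 0.1, 0.0, 0.0], "car"),
    ([0.0, 0.0, 1.0, 0.0], "bike"), ([0.0, 0.0, 0.9, 0.1], "bike"),
]
head = train_head(examples, names)
print(classify(head, names, [0.95, 0.05, 0.0, 0.0]))
```

Because only the small `head` is trained, a few examples and little computing time are enough, which is the practical appeal of transfer learning.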

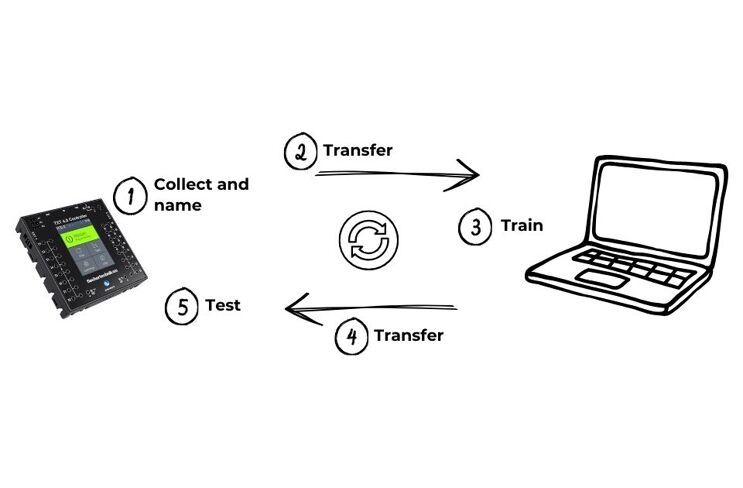

In our experiments with the TXT, we always proceed as follows. First, we use the model to collect images for learning. To do this, we write a program that records images and sorts them into groups: for the barrier model, for example, into one group of images that should open the barrier and one group that should close it. The program collects all the images on the TXT and labels them accordingly.
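One common way to divide images into groups and name them is to save each image into a folder named after its group. The Python sketch below assumes that convention; `capture_image` is a hypothetical placeholder for the real camera call on the TXT, and the folder and file names are made up.

```python
import tempfile
from pathlib import Path

def capture_image() -> bytes:
    # HYPOTHETICAL placeholder: on the TXT, this would read a frame from
    # the camera. Here it just returns dummy bytes, not a real image.
    return b"placeholder image data"

def save_example(root: Path, group: str, image: bytes) -> Path:
    """Store one image in the folder of its group (= its name/label)."""
    folder = root / group
    folder.mkdir(parents=True, exist_ok=True)
    index = len(list(folder.glob("*.png")))        # number the files 0, 1, 2, ...
    path = folder / f"{group}_{index:04d}.png"
    path.write_bytes(image)
    return path

def collect(root: Path, group: str, count: int) -> list[Path]:
    """Record `count` images for one group, e.g. 'open' or 'close'."""
    return [save_example(root, group, capture_image()) for _ in range(count)]

# Demo in a temporary folder: two images per group for a barrier model.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    saved = collect(root, "open", 2) + collect(root, "close", 2)
    names = [p.relative_to(root).as_posix() for p in saved]
print(names)
```

With one folder per group, the folder name itself carries the label, so no separate list of names has to be kept when the data is transferred to the PC.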

Although the TXT 4.0 is a powerful controller, training AI requires somewhat more computing power. For this reason, we transfer the data to the PC or laptop and carry out the training there. After only a few minutes, the trained AI model can be transferred to the TXT.

Now we can use the AI with a different program on the TXT.

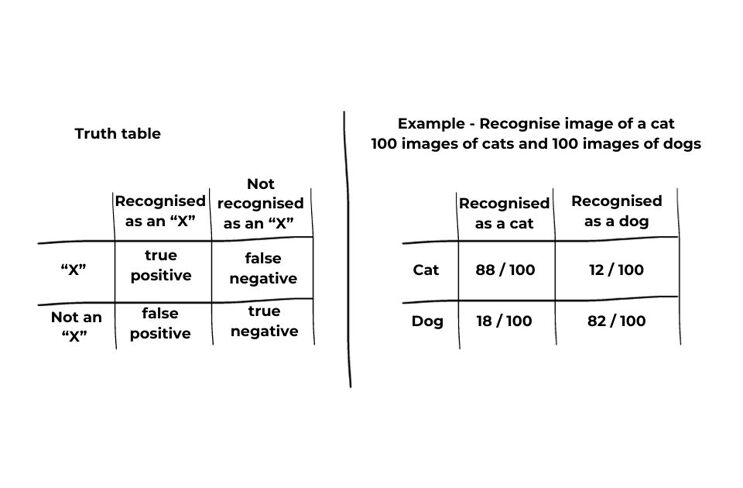

To evaluate how reliably an AI recognises things, a so-called truth table (in machine learning usually called a confusion matrix) is typically used. For this, the AI is shown objects of only one type, and a count is taken of how many it recognised correctly. These are the so-called “true positives”. The objects that were not recognised correctly are the “false negatives”.

Each of the two numbers is divided by the total number of objects shown. This gives two ratios between 0 and 1, which add up to 1.

The nearer the true-positive ratios are to 1 and the nearer the false-negative ratios are to 0, the more reliably your AI has recognised the objects.
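The calculation described above can be written as a short Python function. The object types “red” and “blue” and the counts are made up for illustration; they are not measurements from the kit.

```python
def recognition_ratios(true_label, predictions):
    """The AI was shown only objects of type `true_label`; `predictions`
    lists what it answered each time. Returns (true-positive ratio,
    false-negative ratio), both relative to the number of objects shown."""
    shown = len(predictions)
    true_positives = sum(1 for p in predictions if p == true_label)
    false_negatives = shown - true_positives
    return true_positives / shown, false_negatives / shown

# Made-up test results: 20 objects of each type were shown.
results = {
    "red": ["red"] * 18 + ["blue"] * 2,
    "blue": ["blue"] * 17 + ["red"] * 3,
}
for label, predictions in results.items():
    tp, fn = recognition_ratios(label, predictions)
    print(f"{label}: true positives {tp:.2f}, false negatives {fn:.2f}")
```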

The development of AI over the past few years is fascinating and unprecedented. From self-driving cars and voice recognition to film suggestions on video streaming platforms and personalised advertisements, AI is now used in almost all areas of our lives.

In his novel "Gulliver’s Travels", written as long ago as 1726, Jonathan Swift described a machine called the “Engine”, a device similar to a computer that was meant to extend knowledge by mechanical means.

However, the 1950s are regarded as the decade in which the first meaningful breakthroughs towards creating intelligent machines were made.

• The development of the first game-playing computer programs (chess: 1950, Claude Shannon; draughts/checkers: 1952, Arthur Samuel).

• In 1950, Alan Turing published his paper “Computing Machinery and Intelligence”, in which he proposed the “Turing Test” to check whether a machine can think like a human being.

• The development of the first AI computer program, the Logic Theorist (1955, Allen Newell, Herbert Simon, Cliff Shaw).

• In 1955, the American computer scientist John McCarthy and his colleagues proposed a workshop on “artificial intelligence”. Their proposal introduced the term, which became established at the resulting conference at Dartmouth College in 1956.

In the mid-1960s, Joseph Weizenbaum developed the program “ELIZA”, an early chatbot whose best-known script simulated a conversation between a patient and a psychotherapist. Later, the knowledge gained from ELIZA was incorporated into so-called “expert systems”. In the 1970s, Edward H. Shortliffe developed MYCIN, an expert system designed to support doctors in making diagnostic decisions.

The 1980s, too, brought developments that shaped the future of AI. A milestone was reached in 1984 with the development of the robot RB5X, whose self-learning software was designed to predict future results on the basis of historical data. NETtalk (1986, Terrence Joseph Sejnowski, Charles Rosenberg) was one of the first programs to use artificial neural networks. NETtalk learned to read words aloud and pronounce them correctly, and it could apply what it had learned to words it had not encountered before.

In the 1990s, the first algorithms were developed that allowed systems to make decisions automatically and solve problems by accessing stored data and information. In 1997, the chess computer “Deep Blue”, built by IBM, beat the reigning world chess champion Garry Kasparov in a six-game match. This is considered a historic success for machines in a field that had until then been dominated by humans. Critics point out, however, that “Deep Blue” did not win through cognitive intelligence but largely through brute-force calculation of an enormous number of possible moves.

In 2016, DeepMind’s system “AlphaGo” beat the South Korean Lee Sedol, one of the world’s best Go players. Technological leaps in hardware and software paved the way for Artificial Intelligence to enter our everyday lives. Voice assistants in particular are extremely popular: Apple’s “Siri” was launched on the market in 2011, Microsoft presented its “Cortana” software in 2014, and Amazon introduced Amazon Echo with its voice assistant “Alexa” in 2015. In business, AI development is becoming visible in the form of automation, deep learning and the Internet of Things. Alongside industrial robots, more and more service robots are being developed.

In 2020, a new decade dawned for AI. Over the past few years, OpenAI has developed a series of pioneering AI models, including GPT-3, GPT-4, DALL-E and GLIDE. These models show that AI can handle complex tasks such as text generation, software programming and image generation.

Despite decades of research, the development of Artificial Intelligence has really only just begun. Measures to regulate AI are also becoming increasingly important. Before AI can be used in sensitive areas such as automated driving or medicine, it has to become more reliable and more secure against manipulation.

The European Union’s AI Act requires transparency from AI systems in many areas, so that people can understand how these systems arrive at their results.